
This is definitely the best Stable Diffusion model I have used so far. Around a month ago, I saw a post on the Stable Diffusion subreddit about a new model, and I asked about its capabilities for architectural images. The model wasn’t publicly available yet, so the developer was kind enough to test some prompts for me. The results looked very promising compared to the model I was using at the time.

Freedom.Redmond

This model is now publicly available under the name Freedom.Redmond. I want to try it out and compare it with Realistic Vision V2.0, which, as you may know, is the model I used in my last couple of videos.

Unlike Realistic Vision, which is based on SD 1.5, this new model uses SD 2.1 as its base. It is also a generalist model: it isn’t trained on a single category of images, so you can use it to generate almost anything in very different styles, such as realistic photos, abstract illustrations, oil paintings, and cartoon sketches.

It is tuned for 1024x1024px images, so keep that in mind for the best possible results. That said, I think anything between 768 and 1024px should be fine.

You can download the model from CivitAI; the link is in the resources section.

The really cool thing is that you can try it right now without downloading anything, even if you don’t have Stable Diffusion installed, because the developer has provided a Hugging Face Space for testing. You can open it from the link on the model’s page.

Hugging Face Space

It runs pretty fast. I have been using it since yesterday, and although the speed depends on demand, it seems fine for now. I doubt this Space will stay available for long, so if it doesn’t work when you watch this, the demo period is probably over. You can still download the model and run it yourself.

You can write your positive and negative prompts here. The description recommends trying both with and without the negative prompt, so feel free to experiment.

There is no recommended prompt structure and there are no trigger words for this model. You can browse the example images others have generated with it for inspiration and to see what kinds of prompts work. Some of these images are absolutely insane.

Terracotta Pavilion

Let’s generate our first image. I will reuse prompts I previously ran in both Stable Diffusion and Midjourney so I can compare the results.

First, I used my Midjourney prompt for a private villa with terracotta textures in the desert. For the first generation, I used exactly the same prompt, and then I changed it slightly to vary the form. I mostly used the Hugging Face Space to generate images with this model, while generating the same prompts with Realistic Vision in my local Stable Diffusion installation.

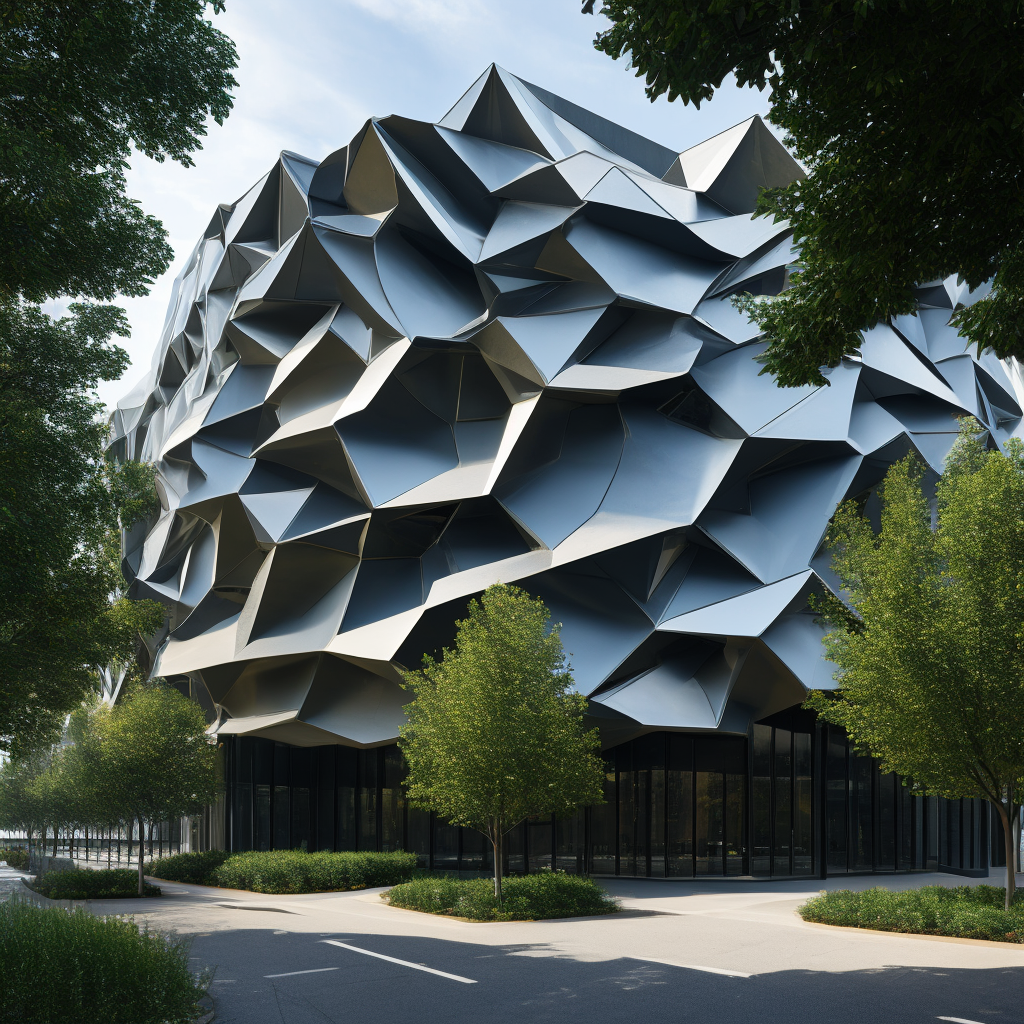

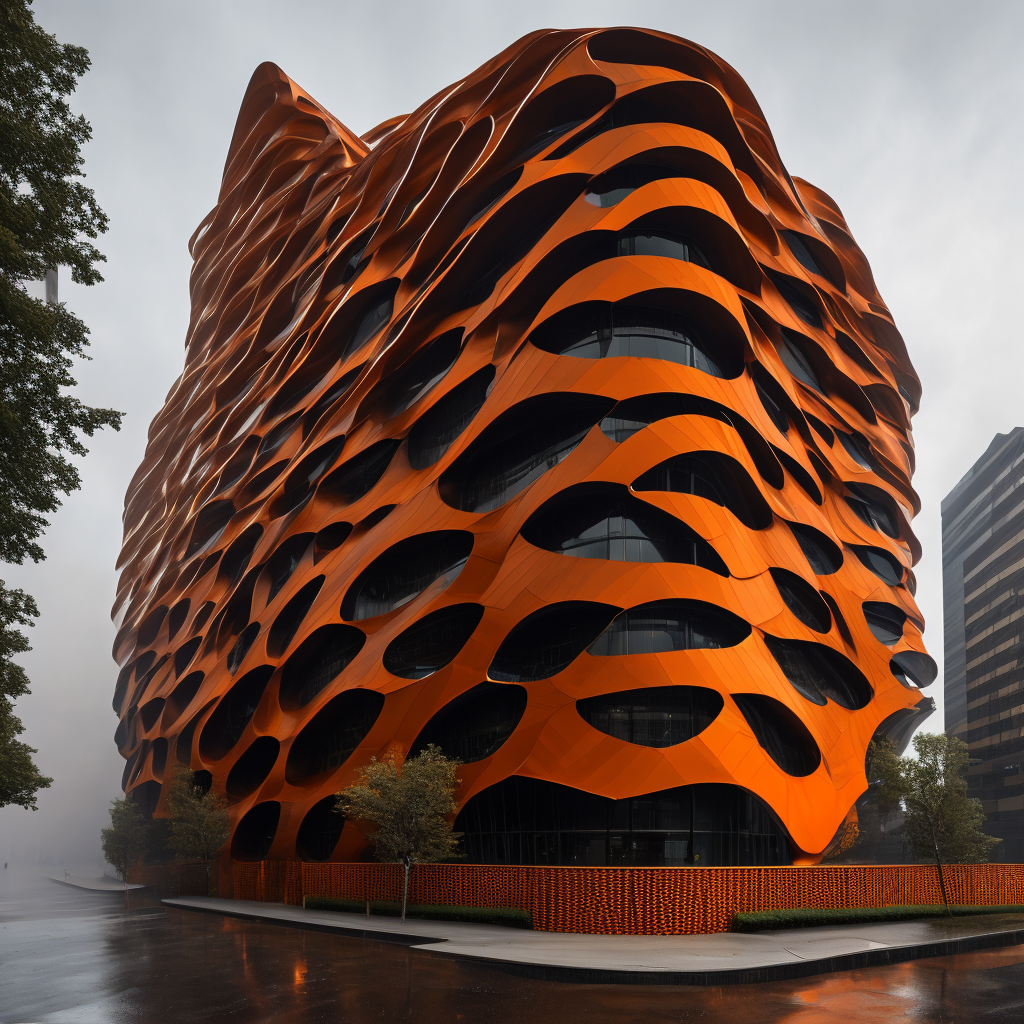

These images turned out great. I was genuinely shocked by the results, because they are almost as good as Midjourney’s, and I even liked some of them more: the color tones, the compositions, the sense of depth, and the lighting with its soft shadows in this image.

If you use the Space, download each image right after it is generated; otherwise, there is no way to see it again.

Comparison with Other Platforms

Later, I decided to run some of the prompts from my last video to add this model to the comparison. If you haven’t seen that video, I compared 8 different AI image generators across 5 categories using more than 800 images. You can check it out here.

Here are some of these images alongside my favorites from the other platforms.

For this abstract mushroom forest, the results were very detailed, and the model generated really high-quality images.

Next, I created this top-view urban design for a public space. Overall, the pavilion forms it generated and their surroundings were much better than I expected. The trees and lighting around the park and the buildings with metal roofs look convincingly real.

Local Installation

If you want to use it locally, place the downloaded model file in the stable-diffusion-webui > models > Stable-diffusion folder.

When I first clicked Generate, I got an error message. If you get the same one, you can solve it by adding the --no-half argument to the webui-user.bat file. Right-click the file, choose Show more options, and click Edit. On the command-line row (set COMMANDLINE_ARGS=), add --no-half and save the file. Now restart the web UI and generate again, and it should work.
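For reference, here is roughly what webui-user.bat looks like after that edit. This is a sketch assuming the stock AUTOMATIC1111 file, where the command-line row is the `set COMMANDLINE_ARGS=` line; if your copy already has other settings or arguments, keep them and just append --no-half.

```bat
@echo off

set PYTHON=
set GIT=
set VENV_DIR=
rem --no-half: do not switch the model to 16-bit floats (uses more VRAM)
set COMMANDLINE_ARGS=--no-half

call webui.bat
```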

I have an RTX 3060 GPU with 6GB VRAM, which is not ideal for this job. I wanted to generate 1024x1024 images, as the model description suggests, but I got an out-of-memory error.

If you run into this too, add the --lowvram argument to the same COMMANDLINE_ARGS line in the webui-user.bat file.

This lets you run Stable Diffusion with less VRAM, but it dramatically slows down generation. If your GPU has a bit more memory, such as 8GB, you can try --medvram instead of --lowvram to speed up the process.
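Combined with the --no-half fix, the argument line for a 6GB card might look like the sketch below. It assumes the stock webui-user.bat layout; only one of --lowvram or --medvram should be active at a time, so the --medvram variant is left commented out.

```bat
@echo off

set PYTHON=
set GIT=
set VENV_DIR=
rem 6GB VRAM: --lowvram lowers memory use at a large cost in speed
set COMMANDLINE_ARGS=--no-half --lowvram
rem With around 8GB VRAM, try this line instead for faster generation:
rem set COMMANDLINE_ARGS=--no-half --medvram

call webui.bat
```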

ControlNet 2.1

The one minor issue is with the ControlNet extension. For me personally, ControlNet is one of the most essential parts of Stable Diffusion, and the main ControlNet models are built for SD 1.5, so those model files can’t be used directly with this model. However, you can download ControlNet models made for SD 2.1 and use them with this model. Because 2.1 is less common than 1.5, there are fewer resources and developments for it.

I will share a video about it soon.

Here are all of the final images together to compare. There is a significant quality difference between these two models. Let me know if you use any other model that you think is better; I would love to try it.

Thank you, and see you at the next one!
